As data volumes grow, traditional relational databases often struggle to meet the needs of organizations that want to extract value from their data. The need to capture, store, and efficiently process large data volumes continues to drive organizations toward distributed, non-relational technologies. Big data workloads call for systems that can scale out to thousands of nodes while providing high throughput and low latency.

Interest in NoSQL products has therefore risen sharply. Many scalable, highly distributed data stores are available today, each offering a different balance of operational simplicity, scalability, performance, cost, and data consistency guarantees. Two of the most popular big data technologies are Hadoop and MongoDB, although, strictly speaking, only MongoDB is a database; Hadoop is a framework for distributed storage and processing.

This article compares Hadoop and MongoDB in terms of their core features, advantages, disadvantages, and key differences, including language, data format, framework, hardware cost, and popularity.

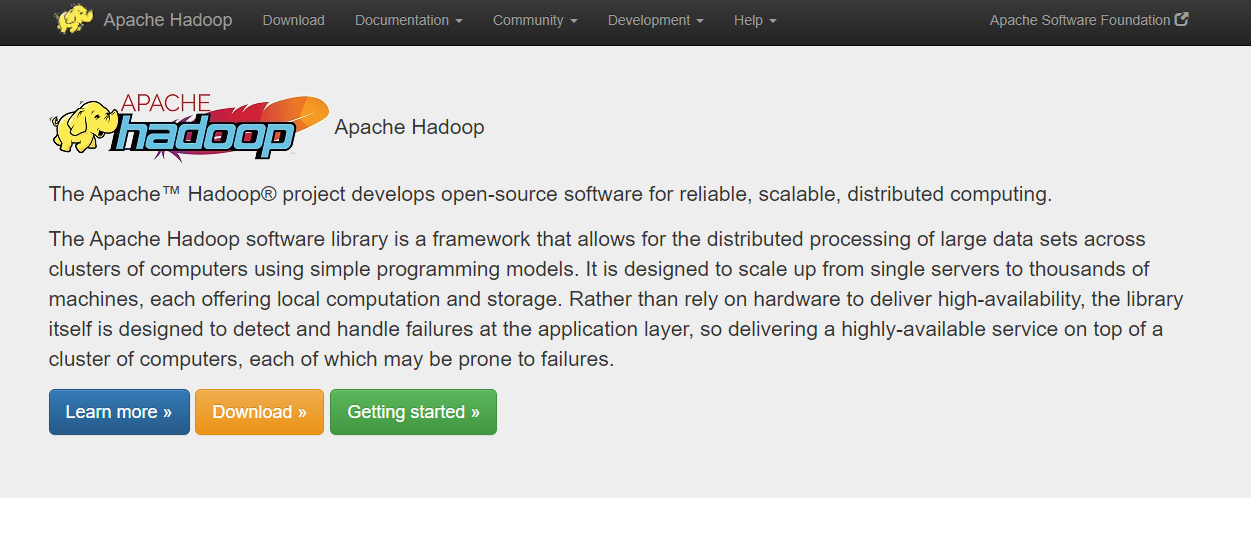

What is Hadoop, and How Does it Work?

Apache Hadoop is open-source software that enables distributed processing of big data across clusters of machines using simple programming models. Hadoop can scale up from a single server to thousands of machines, each offering local computation and storage.

The ability to process structured and unstructured data across commodity servers makes Hadoop an ideal platform for building data-intensive distributed applications.

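Hadoop has four core modules:

- Hadoop Common: the shared libraries and utilities that the other modules rely on

- Hadoop Distributed File System (HDFS): distributed, replicated storage

- YARN: cluster resource management and job scheduling

- Hadoop MapReduce: parallel batch processing of large data sets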

Key Features of Hadoop

Apache Hadoop provides some distinctive features that were instrumental in its growing popularity:

1. Scalability

Hadoop is highly scalable, supporting clusters of thousands of machines and petabytes of data. It also provides a reliable storage platform for big data by replicating the data across multiple nodes in a cluster. This ensures that a single failure won’t impact access to your data: if a server goes down, the data is still available from another node.

2. Fault Tolerance

Hadoop is designed on the assumption that hardware failures are common, so the framework detects and handles node failures automatically. HDFS replicates each data block across multiple servers (three copies by default), so even if one or two servers go down, your data is still accessible.
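To make the replication idea concrete, here is a minimal Python sketch of how replicated blocks survive node failures. The round-robin placement, the node names, and the function names are assumptions made for illustration; real HDFS uses a rack-aware placement policy, and only the default replication factor of three comes from Hadoop itself.

```python
def place_replicas(block_ids, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplified stand-in for HDFS block placement (assumes replication <= len(nodes)).
    """
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = {nodes[(i + k) % len(nodes)] for k in range(replication)}
    return placement


def readable_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one replica on a live node."""
    failed = set(failed_nodes)
    return [block for block, holders in placement.items() if holders - failed]


# Hypothetical five-node cluster holding four blocks.
nodes = ["node1", "node2", "node3", "node4", "node5"]
placement = place_replicas(["blk_0", "blk_1", "blk_2", "blk_3"], nodes)

# With three replicas per block, losing any two nodes leaves every block readable.
survivors = readable_blocks(placement, failed_nodes=["node2", "node3"])
print(survivors)
```

Losing three nodes that happen to hold all copies of a block would make that block unreadable, which is why the replication factor is a trade-off between storage cost and durability.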

3. Parallel Processing

Hadoop processes data in parallel on large clusters of commodity hardware, using a simple programming model built on map and reduce functions. Splitting work this way lets it tackle computationally expensive problems (such as training machine learning models on large data sets) much faster than a single machine could.
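The map and reduce steps can be sketched in a few lines of plain Python. This is an in-memory illustration of the programming model only, not Hadoop code; the function names and sample lines are made up for the example.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


lines = ["big data needs big clusters", "data moves to clusters"]
pairs = [pair for line in lines for pair in map_phase(line)]
counts = dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())
print(counts)
```

In a real cluster, each map call can run on a different machine because it only looks at its own line, and each reduce call can run independently because it only looks at one key.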

4. Open Source

Apache Hadoop is open source, released under the Apache License 2.0, and free to use, so there’s no licensing cost involved. This makes it an economical platform for big data analytics, although you still have to pay for the hardware and the people who operate it.

Advantages of Using Hadoop

As an open-source framework, Hadoop is a flexible platform that supports a variety of programming languages. It’s easy to use and can support various applications. Along with these benefits, Hadoop also offers:

Cost-effectiveness:

Hadoop uses commodity hardware for storage, which is much cheaper than the enterprise-grade hardware required by some other big data analytics tools.

Varied Data Sources:

Hadoop is designed to process both structured and unstructured data. This feature enables you to analyze all your data in one place, regardless of type.

Powerful Analytics:

Data processing and analytics tools in Hadoop are highly flexible and powerful. They can analyze large volumes of data to identify hidden patterns and trends that would be hard for a human analyst to spot.

Low Network Traffic:

Hadoop minimizes data movement across the network. Instead of shipping massive data sets to a central server and processing them in one shot, it splits them into blocks and schedules each task on or near the node that already stores the relevant block, so the blocks are processed simultaneously where they reside.

Compatibility:

Hadoop is compatible with a wide range of big data tools and technologies, ensuring that you can use the right tool for each job. Newer big data tools such as Flink and Spark integrate well with Hadoop.

Disadvantages of Using Hadoop

Despite the numerous benefits of using Hadoop for big data analytics, it has some limitations you should consider before choosing this platform.

Not suitable for small data sets:

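Hadoop is designed for large-scale operations and doesn’t process small data sets efficiently. HDFS uses large blocks (128 MB by default), and the NameNode keeps metadata for every file and block in memory, so a large number of small files wastes resources. Job startup overhead also outweighs the benefit of parallelism when there is little data to process. Its high-capacity design makes it difficult to scale down.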
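A quick calculation illustrates why many small files are a poor fit. The sketch assumes the default HDFS block size of 128 MB and uses made-up file sizes; because the NameNode tracks every file and block in memory, the block count serves as a rough proxy for metadata overhead.

```python
import math

BLOCK_SIZE_MB = 128  # default HDFS block size in Hadoop 2 and later


def block_count(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Number of HDFS blocks occupied by one non-empty file of the given size."""
    return math.ceil(file_size_mb / block_size_mb)


# The same 1 GB of data, stored two different ways (hypothetical sizes).
one_large_file = block_count(1024)              # a single 1,024 MB file
many_small_files = 10_240 * block_count(0.1)    # 10,240 files of 0.1 MB each

print(one_large_file, many_small_files)
```

The data volume is identical in both cases, but the second layout asks the NameNode to track over a thousand times as many blocks, plus one file entry per small file.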

Security concerns:

Hadoop’s security features are disabled by default. Kerberos authentication, access control, and encryption are available but complex to configure, which makes it difficult to manage and control access to your data; this problem is more pronounced at the storage and network levels.

Only supports batch processing:

Hadoop MapReduce focuses on batch processing of data sets, which can be time-consuming. It’s not ideal for real-time data processing, where results are needed within seconds or less.

Stability issues:

While developers generally consider Hadoop a stable framework, there are times when it fails to perform as expected. In most instances, it’s because users haven’t applied the latest patches and fixes.

Processing overhead:

Data sets measured in terabytes or petabytes are large, which makes read/write operations in Hadoop slow. MapReduce reads its input from disk and writes intermediate results back to disk between stages, which makes it slower than engines that keep data in memory.

What is MongoDB, and How Does it Work?

MongoDB is an open-source document database that stores data using JSON-like documents with optional schemas. In other words, MongoDB is a NoSQL database that’s easier to fit into an object-oriented programming model. MongoDB provides easy scalability, high availability, and auto-sharding.

MongoDB stores data as documents grouped into collections, which play roughly the role that tables play in a relational database. Documents are written in a JSON-like syntax and stored as BSON (binary JSON), a binary encoding that records the type of every field. Each document has a unique identifier in its _id field and consists of key-value pairs. The main concepts are:

- Key: A string that names a field within a document.

- Value: The content stored under that key, which can be a scalar, an array, or a nested document.

- Indexes: Data structures that let the database locate documents without scanning the whole collection, similar to how an index helps you find specific passages in a book.

- Queries: Each query is itself expressed as a document that lists the fields and conditions a matching document must satisfy.
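As an illustration of the document model, here is what a single document might look like when represented as a Python dictionary. The field names and values are invented for the example; only the `_id` field name is a MongoDB convention.

```python
import json

# A hypothetical order document: keys are field names, values may be
# scalars, arrays, or nested documents.
order = {
    "_id": "order-1001",                           # unique identifier
    "customer": {"name": "Ada", "city": "London"}, # nested document
    "items": [                                     # array of nested documents
        {"sku": "A1", "qty": 2},
        {"sku": "B7", "qty": 1},
    ],
    "paid": True,
}

# The whole record travels together, so related data is read in one step.
total_qty = sum(item["qty"] for item in order["items"])
print(json.dumps(order, indent=2))
print(total_qty)
```

In a relational design, the customer and the line items would typically live in separate tables; here they are embedded in the order itself.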

Key Technical Features of MongoDB

MongoDB has compelling features that make it stand out from the crowd of other NoSQL databases:

1. Ad hoc Queries

MongoDB lets you run queries on demand without defining them in advance. You can query by field value, by range, or by regular expression. Ad hoc queries are essential for big data because they let you change the question as your needs change.

The results may be different with each execution, depending on the variables of the query. Developers can update, delete, and add data from any document in a collection. This flexibility allows you to make changes on the fly without designing a complicated schema ahead of time for each application or project you work on.
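The sketch below mimics how a query written as a filter document selects documents. It is a deliberately simplified, pure-Python matcher written for this article, not MongoDB's implementation: it handles only top-level fields, exact equality, and the `$gt`, `$lt`, and `$in` operators, and the sample data is made up.

```python
# Simplified versions of three MongoDB comparison operators.
OPERATORS = {
    "$gt": lambda field, arg: field is not None and field > arg,
    "$lt": lambda field, arg: field is not None and field < arg,
    "$in": lambda field, arg: field in arg,
}


def matches(doc, query):
    """Return True if `doc` satisfies every condition in the filter document."""
    for field, condition in query.items():
        value = doc.get(field)
        if isinstance(condition, dict):
            # Operator form, e.g. {"age": {"$gt": 30}}
            if not all(OPERATORS[op](value, arg) for op, arg in condition.items()):
                return False
        elif value != condition:
            # Plain form, e.g. {"city": "London"}
            return False
    return True


def find(collection, query):
    """Return all documents in a list that match the filter document."""
    return [doc for doc in collection if matches(doc, query)]


users = [
    {"name": "Ada", "age": 36, "city": "London"},
    {"name": "Linus", "age": 28, "city": "Helsinki"},
    {"name": "Grace", "age": 45, "city": "New York"},
]

older = find(users, {"age": {"$gt": 30}})
londoners = find(users, {"city": "London", "age": {"$lt": 40}})
print([u["name"] for u in older], [u["name"] for u in londoners])
```

Because the query is just data, an application can build or change it at run time, which is what makes ad hoc querying convenient.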

2. Auto-Sharding

MongoDB makes distributing data across servers much easier. Once a collection is sharded on a shard key, a background balancer automatically redistributes data as you add or remove shards from your cluster. This feature, called auto-sharding, means that you don’t have to migrate your data to new machines manually.

Sharding in MongoDB allows for horizontal scaling of databases, which means you can add more machines to your distributed database to increase its capacity. Applications still see a single logical collection, even though its documents are spread across the whole cluster.
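The idea of spreading documents across shards by hashing a key can be sketched as follows. This is a simplification made for illustration: MongoDB's hashed sharding assigns ranges of hash values (chunks) to shards and moves chunks between them, whereas this sketch uses a plain modulo, and the key format and function names are hypothetical.

```python
import hashlib


def shard_for(key, shard_count):
    """Pick a shard by hashing the shard key (modulo placement, for illustration only)."""
    digest = hashlib.sha256(str(key).encode("utf-8")).hexdigest()
    return int(digest, 16) % shard_count


def distribute(keys, shard_count):
    """Group keys by the shard they would be routed to."""
    shards = {i: [] for i in range(shard_count)}
    for key in keys:
        shards[shard_for(key, shard_count)].append(key)
    return shards


keys = [f"user-{n}" for n in range(1000)]
three = distribute(keys, 3)
four = distribute(keys, 4)

print({shard: len(members) for shard, members in three.items()})
print({shard: len(members) for shard, members in four.items()})
```

Hashing spreads sequential keys roughly evenly, so adding a fourth shard lowers the share of data each shard has to hold.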

3. Load Balancing

With large data sets, it’s crucial to have a database that can scale to accommodate the increasing load. MongoDB’s sharding feature lets you add machines as demand grows and removes much of the complexity of building a scalable database.

Load balancing isn’t just about scale; it’s also about ensuring that your queries are executed in the right place. MongoDB builds upon auto-sharding and replication so that a query router sends each query to the shards that hold the relevant data.

4. Replication

Replication is one of the critical features of MongoDB, as it keeps your data available even when servers go down. A replica set keeps copies of the same data on several servers: a primary accepts writes, and secondaries continuously apply the same changes. Servers sometimes crash or lose their data, which is why replication is an integral part of any highly available distributed system.

If the primary becomes unavailable, the remaining members elect a new primary automatically, provided a majority of voting members can still reach each other. Once a replica set is configured, you don’t have to copy data between servers yourself; the database takes care of it.
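A replica set can only elect a primary while a majority of its voting members are reachable, so the number of failures it tolerates follows from simple arithmetic. The helper names below are made up for this sketch.

```python
def majority(voting_members):
    """Smallest number of members that forms a majority."""
    return voting_members // 2 + 1


def fault_tolerance(voting_members):
    """How many members can fail while a majority is still available."""
    return voting_members - majority(voting_members)


for n in (3, 4, 5):
    print(n, majority(n), fault_tolerance(n))
```

Going from three members to four does not increase the number of tolerated failures, which is why replica sets are usually deployed with an odd number of voting members.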

5. Indexing

It’s no secret that many technical support teams spend a lot of time tuning their databases or fixing problems with them. As the volume, variety, and velocity of data increase, finding the needle in the haystack becomes increasingly tricky.

MongoDB provides rich support for secondary and compound indexes, which come in handy for queries that look up documents by one or more fields. For example, a query that filters on fields like “name” and “address” can use a secondary index on “name,” or a compound index that covers both fields, instead of scanning every document. In short, an index speeds up lookups at the cost of some extra storage and write overhead.
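The difference between scanning a collection and using an index can be shown with a small in-memory example. This is a conceptual sketch only: it uses a Python dictionary as the index, whereas MongoDB indexes are B-tree structures, and the records are invented.

```python
from collections import defaultdict

people = [
    {"name": "Ada", "address": "London"},
    {"name": "Linus", "address": "Helsinki"},
    {"name": "Ada", "address": "Turin"},
]


def scan(records, name):
    """Without an index: inspect every record."""
    return [record for record in records if record["name"] == name]


def build_index(records, field):
    """Map each value of `field` to the positions of the records that contain it."""
    index = defaultdict(list)
    for position, record in enumerate(records):
        index[record[field]].append(position)
    return index


def lookup(records, index, value):
    """With an index: jump straight to the matching positions."""
    return [records[position] for position in index.get(value, [])]


name_index = build_index(people, "name")
print(lookup(people, name_index, "Ada"))
```

Both approaches return the same records; the index simply avoids touching the records that cannot match, at the cost of building and maintaining the extra structure.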

Advantages of MongoDB

MongoDB provides good support for functionalities like auto-sharding, load balancing, and replication. Below are some of the advantages of using MongoDB.

Flexibility:

MongoDB’s document-oriented model is flexible, which allows for quick development, easy prototyping, and testing of applications. Creating different schemas for different applications or projects is also easy.

Friendly design:

MongoDB has a friendly and flexible design, which makes learning the database and creating new applications easy. The design adapts to changes in your data model rather than forcing you to adapt to the database.

Code native data access:

MongoDB is more than just a big document store; its documents map directly onto the native data structures of most programming languages, such as dictionaries, objects, and arrays. This makes working with key-value and hierarchical data straightforward. Official drivers are available for most popular languages, and there’s already a rich library of community-built tools that you can use.

Scalability:

Sharding makes it possible to add more machines to a cluster, and since each shard holds only a subset of the data, capacity and throughput grow as shards are added. Horizontal scalability is one of the most compelling reasons to use MongoDB.

Powerful query language:

MongoDB provides a flexible and powerful query language that allows you to find, group, and sort data based on certain fields or conditions. To make querying even more powerful, MongoDB provides the aggregation framework that allows you to do calculations across your data.
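To give a feel for the aggregation framework, the sketch below shows a pipeline written as Python data, followed by a pure-Python function that computes the same result on an in-memory list. The `$match`, `$group`, `$sum`, and `$sort` operators are part of MongoDB's aggregation framework; the collection contents, field names, and the helper function are assumptions for this example, and the helper only imitates what the server would do.

```python
from collections import defaultdict

# The pipeline as it would be passed to a MongoDB driver: keep paid orders,
# total the amounts per city, then sort by total, largest first.
pipeline = [
    {"$match": {"status": "paid"}},
    {"$group": {"_id": "$city", "total": {"$sum": "$amount"}}},
    {"$sort": {"total": -1}},
]

orders = [
    {"city": "London", "amount": 120, "status": "paid"},
    {"city": "London", "amount": 80, "status": "paid"},
    {"city": "Paris", "amount": 50, "status": "paid"},
    {"city": "Paris", "amount": 300, "status": "pending"},
    {"city": "Berlin", "amount": 90, "status": "paid"},
]


def run_equivalent(docs):
    """Pure-Python imitation of the three pipeline stages above."""
    totals = defaultdict(int)
    for doc in docs:
        if doc["status"] == "paid":                  # $match
            totals[doc["city"]] += doc["amount"]     # $group with $sum
    rows = [{"_id": city, "total": total} for city, total in totals.items()]
    return sorted(rows, key=lambda row: row["total"], reverse=True)  # $sort


result = run_equivalent(orders)
print(result)
```

The advantage of the pipeline form is that the work happens inside the database, close to the data, rather than in application code.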

Disadvantages of MongoDB

MongoDB also has some disadvantages that you should weigh against its advantages.

Data duplications:

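Data duplication is common in MongoDB because its document model favors denormalization: related data is often embedded in several documents rather than stored once and joined. This increases storage use and means that updates may have to touch multiple documents. In sharded clusters, a poorly chosen shard key can also lead to a non-optimal allocation of resources, with some shards holding much larger chunks of data than others.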

High memory usage:

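MongoDB has high memory usage because it keeps indexes and frequently accessed data in memory. By default, the WiredTiger storage engine sizes its internal cache at the larger of 50% of (RAM minus 1 GB) or 256 MB. This might be an issue for applications that run in low-memory environments or share a server with other services.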

Lack of support for joins:

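MongoDB’s support for joins is limited compared with a relational database. The aggregation framework provides a $lookup stage that performs a left outer join between collections, but it is less flexible and often slower than SQL joins. For more complex cases, users must write application code to do the joining.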
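For reference, joining two collections by hand in application code looks roughly like the function below, and the aggregation framework's `$lookup` stage expresses the same left outer join declaratively. The stage's four fields (`from`, `localField`, `foreignField`, `as`) are real; the collection names, field names, and sample data are hypothetical.

```python
# The join expressed as a $lookup stage, as it would appear in a pipeline.
lookup_stage = {
    "$lookup": {
        "from": "customers",          # collection to join with
        "localField": "customer_id",  # field in the orders documents
        "foreignField": "_id",        # field in the customers documents
        "as": "customer",             # output array field
    }
}


def left_outer_join(orders, customers):
    """Manual equivalent: attach matching customers to each order as a list."""
    by_id = {customer["_id"]: customer for customer in customers}
    joined = []
    for order in orders:
        match = by_id.get(order["customer_id"])
        # Like $lookup, unmatched orders are kept and get an empty list.
        joined.append({**order, "customer": [match] if match else []})
    return joined


customers = [{"_id": 1, "name": "Ada"}, {"_id": 2, "name": "Grace"}]
orders = [
    {"_id": "o1", "customer_id": 1},
    {"_id": "o2", "customer_id": 2},
    {"_id": "o3", "customer_id": 99},  # no such customer
]

joined = left_outer_join(orders, customers)
print(joined)
```

Embedding the customer inside each order document would avoid the join entirely, which is the approach the document model usually favors for data that is read together.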

Key Differences between Hadoop and MongoDB

Before we delve further into the technical differences between Hadoop and MongoDB, it’s important to note that Hadoop isn’t a database.

What does this mean?

Hadoop is a platform in the traditional sense: a framework for distributed storage and processing that provides a foundation on which developers can build data systems. MongoDB, by contrast, is a database management system, which means that you can run queries against it directly or use it as the primary data store for your application.

Below is a concise list of the key differences between Hadoop and MongoDB.

1. Language

Hadoop is written in Java, and it makes extensive use of the MapReduce model for processing data. You can write jobs using Hadoop’s native Java APIs or, through Hadoop Streaming, in any language that can read from standard input and write to standard output, such as Python or Ruby.
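Hadoop Streaming passes data to mappers and reducers as lines of text, with the key and value separated by a tab, and it sorts the mapper output by key before the reducer sees it. The sketch below imitates that flow locally. In a real job, the mapper and reducer would each be a standalone script reading `sys.stdin` and printing to standard output; here they are written as functions over lists of lines so the example runs on its own, and `sorted()` stands in for the framework's shuffle-and-sort step. The input format and station names are made up.

```python
from itertools import groupby


def mapper(lines):
    """Turn 'station,temperature' input lines into 'station<TAB>temperature' lines."""
    for line in lines:
        station, temperature = line.strip().split(",")
        yield f"{station}\t{temperature}"


def reducer(sorted_lines):
    """Emit the maximum temperature per station from key-sorted mapper output."""
    pairs = (line.split("\t") for line in sorted_lines)
    for station, group in groupby(pairs, key=lambda pair: pair[0]):
        yield f"{station}\t{max(int(value) for _, value in group)}"


raw = ["oslo,4", "cairo,31", "oslo,9", "cairo,28"]
shuffled = sorted(mapper(raw))   # stand-in for Hadoop's shuffle and sort
result = list(reducer(shuffled))
print(result)
```

Because the reducer receives its input sorted by key, it can process one station at a time without holding the whole data set in memory.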

MongoDB, on the other hand, is written mainly in C++. Developers can work with it through drivers for most popular languages, such as Python, Java, Ruby, JavaScript, and PHP.

2. Data Format

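Hadoop doesn’t impose a data format of its own: HDFS stores files of any type as large, replicated blocks. Data passed between processing stages must be serialized, and data sets are often stored in formats such as SequenceFile, Avro, Parquet, or ORC. Serialization is the process of converting an object into a stream of bytes so that it can be stored or transmitted.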

On the other hand, MongoDB stores information as BSON (binary JSON) documents, a binary encoding designed to be fast to traverse rather than necessarily smaller than JSON text. BSON supports the data types you would expect to find in a relational database, including integers, strings, binary data, and dates. A single BSON document is limited to 16 MB in size.
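BSON is a length-prefixed binary encoding, and its layout is simple enough to reproduce by hand for a couple of types. The encoder below is a minimal sketch written for this article that supports only UTF-8 strings and 32-bit integers; it is not a substitute for a real BSON library. It follows the published layout: a little-endian int32 total length, then one element per field (a type byte, the null-terminated field name, and the value), then a terminating null byte.

```python
import json
import struct


def encode_document(doc):
    """Encode a flat dict of str/int32 values as BSON (illustrative subset only)."""
    body = b""
    for key, value in doc.items():
        name = key.encode("utf-8") + b"\x00"
        if isinstance(value, str):
            data = value.encode("utf-8") + b"\x00"
            # Type 0x02: int32 byte length (including the trailing null), then the string.
            body += b"\x02" + name + struct.pack("<i", len(data)) + data
        elif isinstance(value, int) and not isinstance(value, bool):
            # Type 0x10: 32-bit signed integer, little-endian.
            body += b"\x10" + name + struct.pack("<i", value)
        else:
            raise TypeError(f"unsupported type for field {key!r}")
    total_length = 4 + len(body) + 1
    return struct.pack("<i", total_length) + body + b"\x00"


greeting = encode_document({"hello": "world"})
small = {"a": 1}
bson_size = len(encode_document(small))
json_size = len(json.dumps(small, separators=(",", ":")).encode("utf-8"))

print(greeting)
print(bson_size, json_size)
```

How the two sizes compare depends on the data: a small integer always takes four bytes as a BSON int32, while in JSON text it can be a single character. The length prefixes are what let a reader skip over fields without parsing them.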

3. RDBMS vs. NoSQL

Hadoop is not a relational database. It’s not designed to emulate the features you’d find in traditional RDBMS systems, such as ACID transactions or joins.

MongoDB is also not an RDBMS like MySQL or PostgreSQL. However, it does share some RDBMS concepts, such as indexes, and its aggregation pipelines offer SQL-like operations such as filtering, grouping, and joining. Since version 4.0, it also supports multi-document ACID transactions.

4. Framework

Hadoop consists of several different components, such as HDFS and YARN. Developers can use these tools to build their applications. The Hadoop framework also provides mapper and reducer interfaces for working with data in MapReduce jobs. Applications reach the core components (Hadoop Common, HDFS, YARN, and MapReduce) through the framework’s APIs and command-line tools.

MongoDB, on the other hand, provides a rich framework for developers to access and query data. Data aggregation is a core feature, and it supports replication and sharding. MongoDB also offers a map-reduce command, but it has been deprecated since version 5.0 in favor of the aggregation pipeline, so developers rarely have to write mappers or reducers for their queries.

5. Hardware Cost

Hadoop typically requires more hardware, since a cluster needs multiple servers for storage, processing, and coordination. Besides, Hadoop is a group of interrelated projects rather than a single tool. Such a broad framework increases the complexity of your infrastructure, which may also increase the time and cost associated with deploying it.

MongoDB can run on a single server, so you can get started without setting up additional servers or environments. Production deployments typically use a replica set of at least three members for high availability. MongoDB also provides sharding and replication, which can help you scale your application easily to meet demand.

6. Popularity

The popularity contest between Hadoop and MongoDB is interesting. It’s difficult to say which is more popular since both have large user bases around the world. Popularity shouldn’t be your only criterion in evaluating a data platform, but it is a useful indicator of community support and available skills.

As of November 2021, MongoDB was the more popular of the two in database popularity rankings. However, such rankings need careful interpretation, since both are popular in their own right. The ranking website states that it doesn’t measure the number of installations or how IT systems use a database.

Instead, it measures the number of mentions on websites, general interest, job offers, and relevance on social media. This method of measuring popularity can’t be considered scientific; however, it does provide some insight.

In short, MongoDB is popular for real-time data needs, while Hadoop is popular for batch processing.

Hadoop vs MongoDB (FAQs)

Question: Does MongoDB use Hadoop?

Answer: No, MongoDB doesn’t depend on Hadoop, but the two can be used together. A common pattern is to use MongoDB as the operational, real-time data store and Hadoop for large-scale batch analytics on the same data. Combining them gives organizations the ability to store data in real time, process it efficiently, and derive business value.

Question: Can I use MongoDB for big data?

Answer: You can use it for big data. It has the horizontal scalability and support for high-availability applications that you need. With MongoDB, you’ll be able to use batch processing as well as real-time analytics capabilities along with a consistent development experience.

Question: Can MongoDB replace Hadoop?

Answer: MongoDB can fill in for Hadoop for some workloads, particularly real-time analytics and moderate-scale aggregation, but it isn’t a drop-in replacement for large-scale batch processing. You can use MongoDB to store huge volumes of data, offer a high level of concurrency, and support a large number of users.

Conclusion

If your business needs a solution for real-time data processing, MongoDB is the right tool for the job. Hadoop, on the other hand, is ideal if you have complex business intelligence or analytics requirements that require batch processing.

More research and analysis are required to determine the best approach for your business. To make an informed decision, consider all the factors before choosing a technology. Your choice of a data platform depends not only on how it works but also on other criteria such as performance and cost. Looking at the bigger picture, MongoDB is the clear winner for most application workloads, as it offers a richer feature set and has received positive reviews from organizations that have used it.
